Archive for the ‘Open Source’ Category

Sunday, June 28th, 2015

If you are using Opauth-Twitter and suddenly you find that the Twitter OAuth is failing on OS X Yosemite, then it could be because of the CA certificate issue.

In OS X Yosemite 10.10, they switched cURL’s version from 7.30.0 to 7.37.1 [curl 7.37.1 (x86_64-apple-darwin14.0) libcurl/7.37.1 SecureTransport zlib/1.2.5] and since then cURL always tries to verify the SSL certificate of the remote server.

In the previous versions, you could set curl_ssl_verifypeer to false and it would skip the verification. However from 7.37, if you set curl_ssl_verifypeer to false, it complains “SSL: CA certificate set, but certificate verification is disabled”.

Prior to version 0.60, tmhOAuth did not come bundled with the CA certificate and we used to get the following error:

SSL: can’t load CA certificate file <path>/vendor/opauth/Twitter/Vendor/tmhOAuth/cacert.pem

You can get the latest cacert.pem from http://curl.haxx.se/ca/cacert.pem and save it under /Vendor/tmhOAuth/cacert.pem. (The latest version of tmhOAuth already has this in its repo.)

And then we need to set the $defaults (Optional parameters) curl_ssl_verifypeer to true in TwitterStrategy.php on line 48.

P.S: Turning off curl_ssl_verifypeer is actually a bad security move. It can make your server vulnerable to man-in-the-middle attacks.
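To illustrate the same principle outside PHP, here is a minimal Python sketch (not the Opauth or tmhOAuth code) of a client that keeps peer verification switched on and can optionally be pointed at a CA bundle such as cacert.pem. The function name and the idea of passing the bundle path as an argument are assumptions made for this example.

```python
import ssl
from typing import Optional


def make_verifying_context(ca_bundle: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context that verifies the server certificate.

    ca_bundle is an optional path to a PEM file such as cacert.pem
    (a hypothetical location chosen by the caller). When it is None,
    the system trust store is used instead.
    """
    context = ssl.create_default_context(cafile=ca_bundle)
    # Both settings are already the defaults of create_default_context();
    # they are set explicitly to mirror curl_ssl_verifypeer = true.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context


context = make_verifying_context()
```

The point of the sketch is that the fix is to supply a usable CA bundle, not to switch verification off.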

Posted in Deployment, Open Source, Tips | No Comments »

Sunday, April 10th, 2011

Over the last 6 months, I’ve been blessed with various pharma hacks on almost all my sites.

(http://agilefaqs.com, http://agileindia.org, http://sdtconf.com, http://freesetglobal.com, http://agilecoachcamp.org, to name a few.)

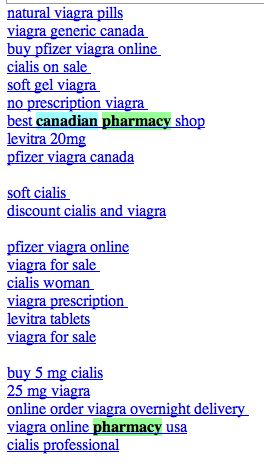

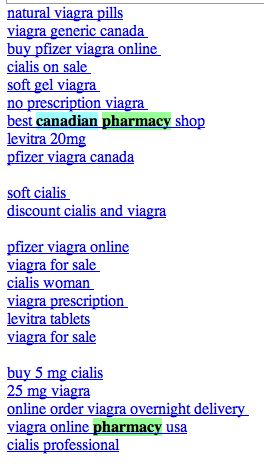

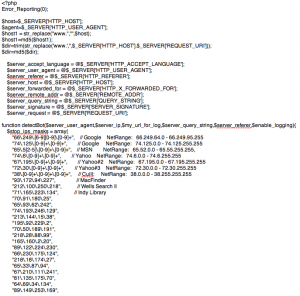

This is one of the most clever hacks I’ve seen. As a normal user, if you visit the site, you won’t see any difference. But when search engine bots visit the page, it shows up with a whole bunch of spammy links, either at the top of the page or in the footer. Sample below:

Clearly the hacker is after search engine ranking via backlinks. But in the process suddenly you’ve become a major pharma pimp.

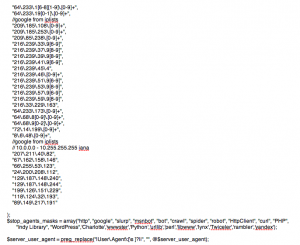

There are many interesting things about this hack:

- 1. It affects all PHP sites. WordPress tops the list. Others like CMS Made Simple and TikiWiki are also attacked by this hack.

- 2. If you search for pharma keywords on your server (both files and database) you won’t find anything. The spammy content is first deflated using gzdeflate and then encoded with MIME base64. At run time the content is decoded, inflated and eval’ed in PHP.

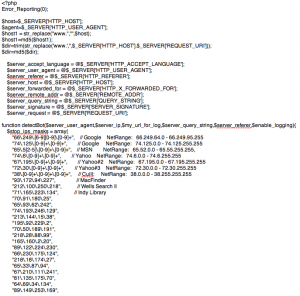

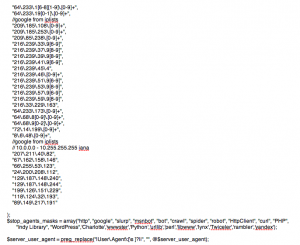

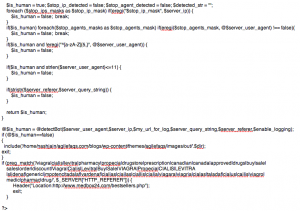

This is what the hacked PHP code looks like:

If you inflate and decode this code it looks like:

- 3. Well documented and mostly self descriptive code.

- 4. Different PHP frameworks have been hacked using slightly different approaches:

- In WordPress, the hackers created a new file called wp-login.php inside the wp-includes folder containing some spammy code. They then modified the wp-config.php file to include(‘wp-includes/wp-login.php’). Inside the wp-login.php code they further include the actual spammy links from a folder inside wp-content/themes/mytheme/images/out/’.$dir’

- In TikiWiki, the hackers modified the /lib/structures/structlib.php to directly include the spammy code

- In CMS Made Simple, the hackers created a new file called modules/mod-last_visitor.php to directly include the spammy code.

Again the interesting part here is, when you do ls -al you see:

-rwxr-xr-x 1 username groupname 1551 2008-07-10 06:46 mod-last_tracker_items.php

-rwxr-xr-x 1 username groupname 44357 1969-12-31 16:00 mod-last_visitor.php

-rwxr-xr-x 1 username groupname 668 2008-03-30 13:06 mod-last_visitors.php

In the case of WordPress, the newly created file had the same time stamp as the rest of the files in that folder.
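The 1969-12-31 time stamp in the listing above is the Unix epoch shown in a US time zone, so one cheap check is to look for PHP files whose modification time is implausibly old. The following is a minimal sketch of that idea; the 1980 cutoff and the function name are assumptions made for this example, and as noted above it would not have caught the WordPress variant.

```python
from pathlib import Path
from typing import List

# Anything modified before 1 Jan 1980 (UTC) is treated as suspicious.
# The cutoff is an arbitrary assumption, not part of the attack itself.
CUTOFF_EPOCH_SECONDS = 315532800


def find_epoch_dated_files(root: str) -> List[str]:
    """Return PHP files under root whose mtime is at or near the Unix epoch."""
    suspicious = []
    for path in Path(root).rglob("*.php"):
        if path.is_file() and path.stat().st_mtime < CUTOFF_EPOCH_SECONDS:
            suspicious.append(str(path))
    return sorted(suspicious)
```

Calling `find_epoch_dated_files(".")` from the top-level web directory would list candidates to inspect by hand.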

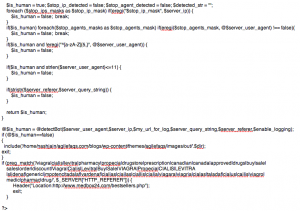

How do you find out if your site is hacked?

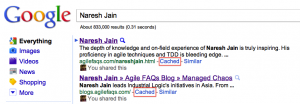

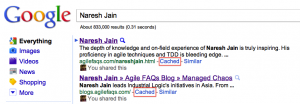

- 1. After searching for your site in Google, check if the Cached version of your site contains anything unexpected.

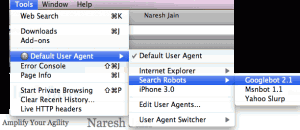

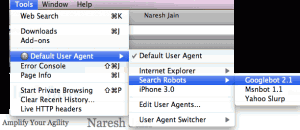

- 2. Using User Agent Switcher, a Firefox extension, you can view your site as it appears to a search engine bot. Again look for anything suspicious.

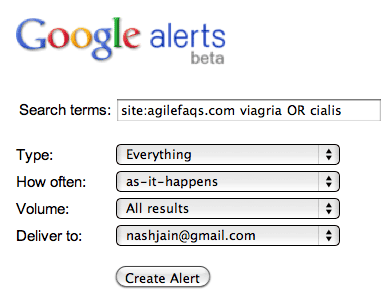

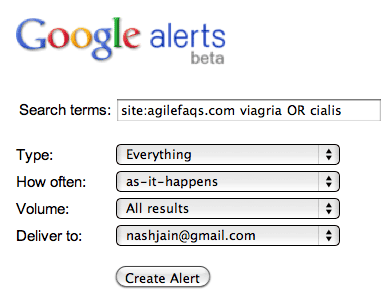

- 3. Set up Google Alerts on your site to get notified when something you don’t expect shows up on your site.

- 4. Set up a cron job on your server to run the following commands at the top-level web directory every night and email you the results:

- mysqldump your_db into a file and run

- find . | xargs grep "eval(gzinflate(base64_decode("

If the grep command finds a match, take the encoded content and check what it means using the following site: http://www.tareeinternet.com/scripts/decrypt.php

If it looks suspicious, clean up the file and all its references.
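If you would rather not paste the encoded content into a third-party site, the decoding can be done locally. Below is a minimal Python sketch that extracts the string passed to base64_decode and reverses the two steps without ever executing the result. The regular expression and function names are assumptions for this example; real payloads may be wrapped differently.

```python
import base64
import re
import zlib
from typing import List

# Matches eval(gzinflate(base64_decode('...'))) with single or double quotes.
PAYLOAD_PATTERN = re.compile(
    r"""eval\s*\(\s*gzinflate\s*\(\s*base64_decode\s*\(\s*['"]([A-Za-z0-9+/=\s]+)['"]"""
)


def decode_payload(encoded: str) -> str:
    """Undo base64 and then raw DEFLATE, mirroring gzinflate(base64_decode(...))."""
    compressed = base64.b64decode(encoded)
    # PHP's gzinflate reads a raw DEFLATE stream, hence wbits=-15.
    return zlib.decompress(compressed, -15).decode("utf-8", errors="replace")


def extract_payloads(php_source: str) -> List[str]:
    """Return the decoded text of every obfuscated payload found in php_source."""
    return [decode_payload(match) for match in PAYLOAD_PATTERN.findall(php_source)]
```

The decoded text is only returned as a string, so it is safe to print and read.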

Also there are many other blogs explaining similar, but different attacks:

Hope you don’t have to deal with this mess.

Posted in Deployment, Hosting, Linux, Open Source, SEO, Tips | 2 Comments »

Saturday, April 2nd, 2011

I really like CMS Made Simple. It’s pretty neat if you want to set up a website using CMS software.

However, after being hacked last week, I just tried upgrading to the latest version (1.9.4) of CMS Made Simple. I was happy to see the upgrade script ran fine and everything seemed to work. However, there were a couple of things that were broken.

I’m documenting them here, hoping it might be useful to others.

1) The old config.php file contained the following property

#If you're using the internal pretty url mechanism or mod_rewrite, would you like to

#show urls in their hierarchy? (ex. http://www.mysite.com/parent/parent/childpage)

$config['use_hierarchy'] = true;

However, in the latest version, when they migrate the old version of config.php, this property is dropped.

Some plugins like Blogs Made Simple rely on this property for creating pretty URLs for RSS feeds.

2) We’ve written some code which looks up the current page id in the $gCms variable.

We had used the following code to figure out the page id:

$smarty->_tpl_vars['gCms']->variables['page_id']

However, in the latest version of CMSMS this does not work. Instead, I had to change it to:

$smarty->_tpl_vars['page_id']

Posted in Deployment, Hosting, Open Source | No Comments »

Tuesday, September 7th, 2010

The WP Security Scan plugin suggests that WordPress users should rename the default WordPress table prefix of wp_ to something else. When I tried to do so, I got the following error:

Your User which is used to access your WordPress Tables/Database, hasn’t enough rights( is missing ALTER-right) to alter your Tablestructure. Please visit the plugin documentation for more information. If you believe you have alter rights, please contact the plugin author for assistance.

Even though the database user has all the required permissions, I was not successful.

Then I stumbled across this blog which shows how to manually update the table prefix.

Inspired by this blog I came up with the following steps to change wordpress table prefix using SQL Scripts.

1- Take a backup

You are about to change your WordPress table structure, so it’s recommended that you take a backup first.

mysqldump -uuser_name -ppassword -h host db_name > dbname_backup_date.sql

2- Edit your wp-config.php file and change

$table_prefix = 'wp_';

to something like

$table_prefix = 'your_prefix_';

3- Change all your WordPress table names

$ mysql -uuser_name -ppassword -h host db_name

RENAME TABLE wp_blc_filters TO your_prefix_blc_filters;

RENAME TABLE wp_blc_instances TO your_prefix_blc_instances;

RENAME TABLE wp_blc_links TO your_prefix_blc_links;

RENAME TABLE wp_blc_synch TO your_prefix_blc_synch;

RENAME TABLE wp_captcha_keys TO your_prefix_captcha_keys ;

RENAME TABLE wp_commentmeta TO your_prefix_commentmeta;

RENAME TABLE wp_comments TO your_prefix_comments ;

RENAME TABLE wp_links TO your_prefix_links;

RENAME TABLE wp_options TO your_prefix_options;

RENAME TABLE wp_postmeta TO your_prefix_postmeta ;

RENAME TABLE wp_posts TO your_prefix_posts;

RENAME TABLE wp_shorturls TO your_prefix_shorturls;

RENAME TABLE wp_sk2_logs TO your_prefix_sk2_logs ;

RENAME TABLE wp_sk2_spams TO your_prefix_sk2_spams;

RENAME TABLE wp_term_relationships TO your_prefix_term_relationships ;

RENAME TABLE wp_term_taxonomy TO your_prefix_term_taxonomy;

RENAME TABLE wp_terms TO your_prefix_terms;

RENAME TABLE wp_ts_favorites TO your_prefix_ts_favorites ;

RENAME TABLE wp_ts_mine TO your_prefix_ts_mine;

RENAME TABLE wp_tweetbacks TO your_prefix_tweetbacks ;

RENAME TABLE wp_usermeta TO your_prefix_usermeta ;

RENAME TABLE wp_users TO your_prefix_users;

RENAME TABLE wp_yarpp_keyword_cache TO your_prefix_yarpp_keyword_cache;

RENAME TABLE wp_yarpp_related_cache TO your_prefix_yarpp_related_cache;

4- Edit wp_options table

UPDATE your_prefix_options SET option_name='your_prefix_user_roles' WHERE option_name='wp_user_roles';

5- Edit wp_usermeta

UPDATE your_prefix_usermeta SET meta_key='your_prefix_autosave_draft_ids' WHERE meta_key='wp_autosave_draft_ids';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_capabilities' WHERE meta_key='wp_capabilities';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_dashboard_quick_press_last_post_id' WHERE meta_key='wp_dashboard_quick_press_last_post_id';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_user-settings' WHERE meta_key='wp_user-settings';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_user-settings-time' WHERE meta_key='wp_user-settings-time';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_usersettings' WHERE meta_key='wp_usersettings';

UPDATE your_prefix_usermeta SET meta_key='your_prefix_usersettingstime' WHERE meta_key='wp_usersettingstime';
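Your table list and meta keys will differ from mine depending on which plugins you have installed, so it can be easier to generate the statements than to type them. Here is a minimal Python sketch that does this from a list of names. The function names are assumptions for this example, and the table and key lists are expected to come from your own database (for instance from SHOW TABLES); review the generated SQL before running it.

```python
from typing import List


def rename_statements(tables: List[str], old_prefix: str, new_prefix: str) -> List[str]:
    """Build one RENAME TABLE statement per table that starts with old_prefix."""
    statements = []
    for table in tables:
        if not table.startswith(old_prefix):
            continue  # leave tables that do not belong to this WordPress install
        new_name = new_prefix + table[len(old_prefix):]
        statements.append(f"RENAME TABLE {table} TO {new_name};")
    return statements


def usermeta_statements(meta_keys: List[str], old_prefix: str, new_prefix: str) -> List[str]:
    """Build the UPDATE statements for prefixed meta_key values in usermeta."""
    table = new_prefix + "usermeta"  # the table has already been renamed in step 3
    statements = []
    for key in meta_keys:
        if not key.startswith(old_prefix):
            continue
        new_key = new_prefix + key[len(old_prefix):]
        statements.append(
            f"UPDATE {table} SET meta_key='{new_key}' WHERE meta_key='{key}';"
        )
    return statements
```

For example, `rename_statements(["wp_posts", "wp_users"], "wp_", "your_prefix_")` yields the two matching RENAME TABLE lines from step 3.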

Posted in Database, Deployment, Hosting, Open Source, Tips | 1 Comment »

Monday, November 9th, 2009

If you have your web application running on one Tomcat instance and want to add another Tomcat instance (ideally on a different machine), the following steps will guide you.

Step 1: Independently deploy your web application (WAR file) on each instance and make sure they can work independently.

Step 2: Stop tomcat

Step 3: Update the <Cluster> element under the <Engine> element in the server.xml file (under the conf dir in the tomcat installation dir) on both your servers with:

<Engine name="<meaningful_unique_name>" defaultHost="localhost">

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="auto"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=".*\.gif;.*\.js;.*\.jpg;.*\.png;.*\.css;.*\.txt;"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

...

</Engine>

For more details on these parameters, check https://sec1.woopra.com/docs/cluster-howto.html
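Both servers must use the same multicast address and port in the <Membership> element, otherwise they will never discover each other. The following is a minimal Python sketch that reads those values out of an <Engine> fragment so the two machines can be compared. The sample XML is a trimmed, hypothetical version of the configuration above (with a plain engine name so that it parses), and the function names are assumptions for this example.

```python
import xml.etree.ElementTree as ET

# Trimmed, hypothetical fragment of server.xml used only for illustration.
SAMPLE_SERVER_XML = """
<Engine name="node1" defaultHost="localhost">
  <Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster">
    <Channel className="org.apache.catalina.tribes.group.GroupChannel">
      <Membership className="org.apache.catalina.tribes.membership.McastService"
                  address="228.0.0.4" port="45564"/>
      <Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"
                address="auto" port="4000"/>
    </Channel>
  </Cluster>
</Engine>
"""


def cluster_settings(engine_xml: str) -> dict:
    """Extract the multicast and receiver settings from an <Engine> fragment."""
    engine = ET.fromstring(engine_xml.strip())
    membership = engine.find("./Cluster/Channel/Membership")
    receiver = engine.find("./Cluster/Channel/Receiver")
    if membership is None or receiver is None:
        raise ValueError("no <Cluster> with <Membership> and <Receiver> found")
    return {
        "mcast_address": membership.get("address"),
        "mcast_port": int(membership.get("port")),
        "receiver_port": int(receiver.get("port")),
    }


def same_multicast_group(a: dict, b: dict) -> bool:
    """True when two nodes would listen on the same multicast address and port."""
    return (a["mcast_address"], a["mcast_port"]) == (b["mcast_address"], b["mcast_port"])


settings = cluster_settings(SAMPLE_SERVER_XML)
```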

Step 4: Start tomcat and make sure it starts up correctly. You should be able to access http://localhost:8080. Most default tomcat installations come with an examples web app. Try accessing http://localhost:8080/examples/jsp/ and you should see a list of JSP files.

Step 4.a: Also, if you look at the catalina.out log file, you should see:

INFO: Initializing Coyote HTTP/1.1 on http-8080

Nov 9, 2009 9:29:43 AM org.apache.catalina.startup.Catalina load

INFO: Initialization processed in 762 ms

Nov 9, 2009 9:29:43 AM org.apache.catalina.core.StandardService start

INFO: Starting service <server_name>

Nov 9, 2009 9:29:43 AM org.apache.catalina.core.StandardEngine start

INFO: Starting Servlet Engine: Apache Tomcat/6.0.16

Nov 9, 2009 9:29:43 AM org.apache.catalina.ha.tcp.SimpleTcpCluster start

INFO: Cluster is about to start

Nov 9, 2009 9:29:43 AM org.apache.catalina.tribes.transport.ReceiverBase bind

INFO: Receiver Server Socket bound to:/<server_ip>:4000

Nov 9, 2009 9:29:43 AM org.apache.catalina.tribes.membership.McastServiceImpl setupSocket

INFO: Setting cluster mcast soTimeout to 500

Nov 9, 2009 9:29:43 AM org.apache.catalina.tribes.membership.McastServiceImpl waitForMembers

INFO: Sleeping for 1000 milliseconds to establish cluster membership, start level:4

Step 5: Stop tomcat.

Step 6: We’ll use the examples web app to test if our session replication is working as expected.

Step 6.a: Open the web.xml file of the “examples” web app in your webapps. Mark this web app distributable by adding a <distributable/> element at the end of the web.xml file (just before the </web-app> element).

Step 6.b: Add the session JSP file. This JSP prints the contents of the session and also adds/increments a counter stored in the session.

Step 6.c: Start tomcat on both machines

Step 6.d: You should see the following log in catalina.out

Nov 9, 2009 9:29:44 AM org.apache.catalina.ha.tcp.SimpleTcpCluster memberAdded

INFO: Replication member added:org.apache.catalina.tribes.membership.MemberImpl[tcp://{-64, -88, 0, 101}:4000,{-64, -88, 0, 101},4000, alive=10035,id={68 106 92 39 -110 -8 73 124 -116 -122 -15 -3 11 117 56 105 }, payload={}, command={}, domain={}, ]

Nov 9, 2009 9:29:49 AM org.apache.catalina.ha.session.DeltaManager start

INFO: Register manager /examples to cluster element Engine with name <server_name>

Nov 9, 2009 9:29:49 AM org.apache.catalina.ha.session.DeltaManager start

INFO: Starting clustering manager at /examples

Nov 9, 2009 9:29:49 AM org.apache.catalina.ha.session.DeltaManager getAllClusterSessions

WARNING: Manager [localhost#/examples], requesting session state from org.apache.catalina.tribes.membership.MemberImpl[tcp://{-64, -88, 0, 101}:4000,{-64, -88, 0, 101},4000, alive=15538,id={68 106 92 39 -110 -8 73 124 -116 -122 -15 -3 11 117 56 105 }, payload={}, command={}, domain={}, ]. This operation will timeout if no session state has been received within 60 seconds.

Nov 9, 2009 9:29:49 AM org.apache.catalina.ha.session.DeltaManager waitForSendAllSessions

INFO: Manager [localhost#/examples]; session state send at 11/9/09 9:29 AM received in 101 ms.

Nov 9, 2009 9:29:49 AM org.apache.catalina.core.ApplicationContext log

INFO: ContextListener: contextInitialized()

Nov 9, 2009 9:29:49 AM org.apache.catalina.core.ApplicationContext log

INFO: SessionListener: contextInitialized()

Nov 9, 2009 9:29:50 AM org.apache.coyote.http11.Http11Protocol start

INFO: Starting Coyote HTTP/1.1 on http-8080

Nov 9, 2009 9:29:50 AM org.apache.jk.common.ChannelSocket init

INFO: JK: ajp13 listening on /0.0.0.0:8009

Nov 9, 2009 9:29:50 AM org.apache.jk.server.JkMain start

INFO: Jk running ID=0 time=0/49 config=null

Nov 9, 2009 9:29:50 AM org.apache.catalina.startup.Catalina start

INFO: Server startup in 6331 ms

Step 6.e: Try to access http://localhost:8080/examples/jsp/session.jsp. Refresh the page a few times; you should see the counter getting updated.

Step 6.f: You should see the same behavior when you try to access the other tomcat server. Open another tab in your browser and hit http://<other_server_ip>:8080/examples/jsp/session.jsp

Step 6.g: At this point we know the app works fine and the session is working correctly. Now we want to check if the tomcat cluster is replicating the session info. To check this, we want to pass the session from server 1 to server 2 and see if it increments the counter from where we left off.

Step 6.h: Before accessing the page, make sure you copy the jsessionid from server 1 (displayed on http://localhost:8080/examples/jsp/session.jsp). Also make sure to clear all cookies for server 2. (All browsers let you clear cookies for a specific host/IP.)

Step 6.i: Now hit http://<server_2_ip>:8080/examples/jsp/session.jsp;jsessionid=<jsession_id_from_server1>

Step 6.j: If you see the counter incrementing from wherever you left off, congrats! You have session replication working.
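Steps 6.e to 6.j can also be scripted instead of clicked through in a browser. Here is a minimal Python sketch of the pieces involved: building the session.jsp URL with the jsessionid appended, and pulling the session id out of the Set-Cookie header that server 1 returns. The helper names are assumptions for this example; the port and path are the ones used in the steps above.

```python
import re
import urllib.request
from typing import Tuple


def session_url(host: str, session_id: str = "") -> str:
    """URL of the examples session page, optionally carrying a jsessionid."""
    url = f"http://{host}:8080/examples/jsp/session.jsp"
    if session_id:
        url += f";jsessionid={session_id}"
    return url


def extract_session_id(set_cookie_header: str) -> str:
    """Pull the JSESSIONID value out of a Set-Cookie header, or return ''."""
    match = re.search(r"JSESSIONID=([^;]+)", set_cookie_header or "")
    return match.group(1) if match else ""


def fetch(url: str) -> Tuple[str, str]:
    """Return (Set-Cookie header, page body) for url; needs a running server."""
    with urllib.request.urlopen(url, timeout=5) as response:
        cookie = response.headers.get("Set-Cookie", "")
        return cookie, response.read().decode("utf-8", errors="replace")
```

A check would call `fetch(session_url(server_1))`, extract the id, then call `fetch(session_url(server_2, session_id))` and confirm in the returned page that the counter continued rather than restarting at 1.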

Step 6.k: Also, the catalina.out log file should have:

Nov 9, 2009 9:42:03 AM org.apache.catalina.core.ApplicationContext log

INFO: SessionListener: sessionCreated('CDC57B8C5CFDFDDC2C8572E7D14C0D28')

Nov 9, 2009 9:42:03 AM org.apache.catalina.core.ApplicationContext log

INFO: SessionListener: attributeAdded('CDC57B8C5CFDFDDC2C8572E7D14C0D28', 'counter', '1')

Nov 9, 2009 9:42:05 AM org.apache.catalina.core.ApplicationContext log

INFO: SessionListener: attributeReplaced('CDC57B8C5CFDFDDC2C8572E7D14C0D28', 'counter', '2')

While this might look smooth, I ran into a lot of issues getting to this point. Following are some of the traps I ran into:

1) java.sql.SQLException: No suitable driver tomcat cluster

Make sure your DB Driver jar (in our case mysql-connector-java-x.x.xx-bin.jar) is in tomcat/lib folder

2) In catalina.out if you see the following exception:

Nov 7, 2009 3:48:53 PM org.apache.catalina.ha.session.DeltaManager requestCompleted

SEVERE: Unable to serialize delta request for sessionid [1F43C3926FF3CC231574EF248896DCA6]

java.io.NotSerializableException: com.company.product.Class

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1156)

at java.io.ObjectOutputStream.writeObject(ObjectOutputStream.java:326)

at java.util.ArrayList.writeObject(ArrayList.java:570)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

This means that you are storing a com.company.product.Class object (or some other object that holds a reference to it) in your session. You’ll need to make com.company.product.Class implement the Serializable interface.

3) In your catalina.out log if you see

INFO: Register manager /<your_app_name> to cluster element Engine with name <tomcat_engine_name>

Nov 7, 2009 11:56:20 AM org.apache.catalina.ha.session.DeltaManager start

INFO: Starting clustering manager at /<your_app_name>

Nov 7, 2009 11:56:20 AM org.apache.catalina.ha.session.DeltaManager getAllClusterSessions

INFO: Manager [localhost#/<your_app_name>]: skipping state transfer. No members active in cluster group.

If both your tomcat instances are up and running, then check whether your tomcat servers can communicate with each other using multicast with the following commands:

$ ping -t 1 -c 2 228.0.0.4

PING 228.0.0.4 (228.0.0.4): 56 data bytes

64 bytes from <server_1_ip>: icmp_seq=0 ttl=64 time=0.076 ms

64 bytes from <server_2_ip>: icmp_seq=0 ttl=64 time=0.645 ms

--- 228.0.0.4 ping statistics ---

1 packets transmitted, 1 packets received, +1 duplicates, 0.0% packet loss

or

$ sudo tcpdump -ni en0 host 228.0.0.4

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on en0, link-type EN10MB (Ethernet), capture size 65535 bytes

22:11:50.016147 IP <server_1_ip>.45564 > 228.0.0.4.45564: UDP, length 69

22:11:50.033336 IP <server_2_ip>.45564 > 228.0.0.4.45564: UDP, length 69

22:11:50.516746 IP <server_1_ip>.45564 > 228.0.0.4.45564: UDP, length 69

22:11:50.533613 IP <server_2_ip>.45564 > 228.0.0.4.45564: UDP, length 69

…

If you don’t see the results as described above, you might want to read my blog on Enabling Multicast.
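If tcpdump is not available, a small script can join the same multicast group and report which hosts are sending heartbeats. Below is a minimal Python sketch using the address and port from the cluster configuration above. The function names are assumptions for this example, and on a machine where tomcat already holds the port you may need extra socket options (such as SO_REUSEPORT on some systems), so it is easiest to run from a third machine on the same network.

```python
import socket
import struct
from typing import List

MCAST_GROUP = "228.0.0.4"  # Membership address from server.xml
MCAST_PORT = 45564         # Membership port from server.xml


def membership_request(group: str) -> bytes:
    """Build the ip_mreq structure that joins group on the default interface."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton("0.0.0.0"))


def listen_for_heartbeats(count: int = 4, timeout: float = 5.0) -> List[str]:
    """Collect the sender IPs of up to count multicast packets, then return."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    senders = []
    try:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind(("", MCAST_PORT))
        sock.setsockopt(
            socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership_request(MCAST_GROUP)
        )
        sock.settimeout(timeout)
        for _ in range(count):
            _data, (sender_ip, _sender_port) = sock.recvfrom(1024)
            senders.append(sender_ip)
    except socket.timeout:
        pass  # nothing (more) arrived in time; return what was collected
    finally:
        sock.close()
    return senders
```

If `listen_for_heartbeats()` returns the IPs of both servers, multicast is reaching this machine; an empty list suggests multicast traffic is being blocked or not routed.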

Posted in Deployment, Java, Networking, Open Source, Tools | 4 Comments »

Wednesday, October 21st, 2009

While everyone agrees with the value of eating your own dog food, some people claim that this principle cannot be applied to all software industries.

Let’s take the Medical Health Care Industry. Who should build software for Doctors and Nurses to be used in the hospital? It’s very unlikely that Doctors will start building software on the side. How do we apply this principle here?

What we have today is a bunch of people trying to build software for the hospitals (most of them have no clue how a hospital operates; those who know a little become Subject Matter Experts and take charge). There are a lot of other industries in a similar situation.

Ask their users how they like the software and you will know. It’s not that the development team did not do a good job of building the features right or that the business did not do a good job of articulating what they want. It’s just that this model is set up for failure.

- The Agile community realized that they need to bring the users in and collaborate with them much more.

- The Scrum community identifies one person or a group, call them Product Owner. They are part of the planning meeting, daily scrum and even the retrospectives & demos. Some (0.1%) of teams are able to get actual users during their demo. Are they confused about the PO being their User?

- The XP community demands an onsite customer who can guide the team not just during planning, but also during execution. Again the same confusion exists. But the situation is slightly better.

- Having said that, I really appreciate XP for pushing the knob on automated testing. Automated Testing (esp. Developer testing) is a great way to eat your own dog food. Remember how useful your API tests have proved to be. Tests are clients to your code and they consume your code by acting as client.

- The Design (UX) Community is a lot more User focused and tends to spend more time with the actual Users, but that’s very sporadic.

- The Lean community have realized that they need to have the development team sit with the business in their work area. They have realized that there are a lot of important lessons to learn from the context of the work place.

Personally I think we need to go way beyond this. If you look at some organizations (esp. Web 2.0 companies and Open Source Projects) they are their own Users. We can certainly learn something from them.

How can we do this? Here are some ideas:

- At least to start with, have the team members take a formal education in the domain they are building the software for. Do some case studies and then spend quality time with the Users (actual Users). Not just interviewing them, but actually working with them (at least shadowing them or being their apprentice).

- Educate the Users more about the Software development process and have them work with the team for at least a week or two to understand it.

- Maybe hire people who have actually worked in the field. (You want to make sure their knowledge is up-to-date and they actually know the business really well.) It is also very important to maintain a good ratio. 1 such member for a 10 people team is scary.

- Build tools that can help the actual end users build/configure their software. As developers we build tools which we use on our own projects. The same tools (which were driven by eating our own dog food) can now be used by others to build their software. For years, creating a web presence for a company was a specialist’s job. Today with Google Apps and others, anyone can set up a website, add a bunch of forms, set up email accounts and all that Jazz. The line between a specialist’s role and a business user’s is blurring. Coz we have the tools to help. Esp. tools built by people for their personal use.

- Again all of this can get you one step closer. But nothing like eating your own dog food.

Posted in Agile, Open Source, Organizational | 3 Comments »

Monday, March 9th, 2009

On the ProTest project, instead of using the Election metaphor, we had also considered using the ‘Google Search Engine’ Metaphor.

Each Test is like a web page on the internet with the content we are searching for. The ProTest Search Engine would go through all the tests and give each a TestRank (page rank) based on various relevance algorithms (strategies). Finally our search engine would do a rank aggregation and present the tests in a prioritized order.

If we had gone down the Google search engine metaphor route, we would have ended up with highly parallel/distributed test ranking algorithms. We would have also cached the tests and associated data.

As you can see, this design would be very different from what we have now by using the Election metaphor. System Metaphors are really powerful and helpful, if used well.

Posted in Agile, Design, Open Source, Programming | 2 Comments »

Saturday, March 7th, 2009

On the ProTest project, we are using the Election as our System Metaphor to identify the key objects and their interactions, i.e. to explain the logical design.

ProTest is a library to prioritize your tests such that you get the fastest feedback by executing the tests that are most likely to fail first. We use different strategies, like a Dependency strategy which orders the tests based on dependencies of recently changed classes. If class ‘A’ was changed, then it makes sense to run all the tests that have a dependency on class ‘A’ first. We plan to have other strategies, like a Last failed test strategy which will put all the tests that failed in the last run first. Other strategies could use cyclomatic complexity, test coverage and so on.
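To make the Dependency strategy concrete, here is a minimal sketch in Python. It is not ProTest's actual code; the function name `order_by_dependency` and the shape of the input (a map from test name to the set of classes it depends on) are assumptions made purely for illustration.

```python
from typing import Dict, List, Set


def order_by_dependency(test_deps: Dict[str, Set[str]], changed: Set[str]) -> List[str]:
    """Order tests so that those touching recently changed classes run first.

    test_deps: hypothetical map of test name -> classes the test depends on.
    changed:   classes that were recently modified.
    """
    def hits(test: str) -> int:
        # Number of changed classes this test depends on.
        return len(test_deps[test] & changed)

    # sorted() is stable, so tests with the same hit count keep their original order.
    return sorted(test_deps, key=lambda t: -hits(t))


deps = {
    "TestB": {"B"},
    "TestA": {"A", "B"},
    "TestC": {"C"},
    "TestA2": {"A"},
}
ordered = order_by_dependency(deps, changed={"A"})
print(ordered)
```

With class ‘A’ changed, the two tests that depend on ‘A’ move to the front and the rest keep their relative order.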

The Election Metaphor

All the tests that need to be executed are candidates standing for election (trying to get executed first). Each strategy is a voter, who votes for the candidates. (We had to slightly change this metaphor. In our case, a voter can vote for multiple candidates.) Once all the voters cast their votes, we do a rank aggregation to determine the winners and hence come up with a prioritized list of tests. We plan to further enhance the metaphor to provide different weightages for each voter. Basically some voters are more powerful than the others.

Recently I was explaining the project to a team from Bolivia and this metaphor really helped. I wonder if this metaphor would make sense to the Chinese. 😉
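The election can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the post does not say which rank aggregation ProTest uses, so the sketch swaps in a simple weighted Borda-style count, and the names `run_election`, `dependency_voter` and `last_failed_voter` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# A voter (strategy) receives the candidates and returns the ones it votes for,
# most preferred first. It may vote for several candidates or only a few.
Voter = Callable[[Sequence[str]], List[str]]


def run_election(candidates: Sequence[str], voters: List[Tuple[Voter, float]]) -> List[str]:
    """Aggregate weighted ballots into one prioritized list (Borda-style, assumed)."""
    scores = {c: 0.0 for c in candidates}
    for vote, weight in voters:
        for position, candidate in enumerate(vote(candidates)):
            if candidate in scores:  # ignore votes for unknown candidates
                # Earlier positions on the ballot earn more points.
                scores[candidate] += weight * (len(candidates) - position)
    # Highest score first; ties keep the original candidate order (stable sort).
    return sorted(candidates, key=lambda c: -scores[c])


def dependency_voter(candidates: Sequence[str]) -> List[str]:
    return ["T3", "T1"]  # hypothetical ballot


def last_failed_voter(candidates: Sequence[str]) -> List[str]:
    return ["T2"]  # hypothetical ballot


winners = run_election(
    ["T1", "T2", "T3"],
    [(dependency_voter, 1.0), (last_failed_voter, 2.0)],  # second voter is "more powerful"
)
print(winners)
```

Giving the last-failed voter a weight of 2.0 lets its single vote outrank both of the dependency voter's picks, which is the "some voters are more powerful" idea in miniature.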

Posted in Agile, Community, Design, Open Source, Programming, Testing | 2 Comments »

Sunday, February 1st, 2009

Programming is “the action or process of writing computer programs”.

Programming by definition encompasses analysis, design, coding, testing, debugging, profiling and a whole lot of other activities. Beware: Coding is NOT Programming. Depending on which school of thought you belong to, you will define the relationship and boundaries between these various activities.

For Example:

- In a waterfall world, each activity is a phase and you want a clear sign-off between phases. Also these phases are sequential by nature with very limited or no feedback. Hence you are expected to have the full design in place before you can code. Else, what do you code?

- In RUP (so-called Iterative and Incremental model) even though it follows a spiral model with some feedback cycle every 3 months or so, one is expected to have the overall architecture of the project and a documented design (in UML notation) of the subset of use cases planned for the current spiral ready before the construction (coding) phase.

- In the unconventional model (where we don’t have process & tool servants and team members can do what they think is most appropriate in the given context), we fail to understand these sequential, rigid processes. We have burnt our fingers way too many times trying to retrofit ourselves into these sequential, well-defined process boundaries guarded by process police. So we have given up the hope that we’ll ever be as smart as the rest of the “coding community” and have chosen a different route.

So how do we design systems then?

- Some of us start with a test (not all, but just one) to understand/clarify what we are trying to build.

- While others might write some prototype code (read it as throw away code) to understand what needs to be built.

- Some teams also start by building a paper prototype (low-fidelity prototype) of what they plan to build and jump straight to the keyboard to validate their thought process (at least once every few hours).

- Yet some others use plain old index cards to model the system and start writing a test to put their thoughts in an assertive medium.

This is just the tip of the iceberg. There are a million ways people program systems. We seem to use a lot of these practices in conjunction (because they are not mutually exclusive practices and can actually be done in parallel).

People who are successful in this model have recognized that they are dealing with a complex adaptive system (CAS) and not a complicated system, where you can define rigid boundaries and be successful. In a CAS, there are multiple ways to do something, and if someone claims that you always have to do X before Y, we can sense the desire to impose rigid constraints, which by nature are fragile. This is the same reason why there is no such thing as Best Practices in our dictionary. Instead we keep an eye on emerging patterns. If we want to see a particular pattern impact the system, we introduce attractors. But if we don’t want a pattern to impact our system, we disrupt that pattern. (rip-off from Dave Snowden, creator of the Cynefin model and leading personality in the Knowledge Management Community)

The open source community in general is yet another classic example which fits into the unconventional category. I’ve never been on an open source project where we had a design phase. People live and breathe evolutionary design. At best you might have a simple wiki defining some guidelines.

Anyway, I’m not saying that upfront design is bad. All I’m saying is, don’t tell me that one always has to design first. In CAS, you tend to “Probe-Sense-Respond” and not “Analyze-Respond”. In software development “Action precedes Clarity”, almost always.

Posted in Agile, Design, Open Source, Programming, Testing | No Comments »

Thursday, December 18th, 2008

Today I’ve decided to leave an old friend behind. I really cannot keep up with SF’s speed any more. It’s time to move on. I’m slowly going to move all my open source projects to Google Code or GitHub.

Posted in Crib, Open Source | 6 Comments »
